How do you automate public cloud deployments with Terraform, GitLab and Azure?

As a cloud engineer, I automate public cloud deployments to reduce errors, save time and help an organisation meet its quality principles. For this you’ll need a set of tools and software. There are plenty of options out there, but in this blog I’ll describe how to use GitLab, Terraform and Azure. I won’t go into full detail on each of them, because they all come with excellent documentation of their own.

Cloud agnostic with Terraform

Solvinity is a multi-cloud provider, so we chose Terraform as our cloud provisioning tool because it is cloud agnostic. This makes collaborating with other cloud engineers, who work on deployments in other public clouds, more convenient.

By the way, Terraform is not just a provisioning tool for public cloud: it can also provision resources in VMware, Rundeck and many other providers. It’s even possible to deploy resources to different public clouds simultaneously. In short, Terraform enables us to write our infrastructure as code.

A customised CI/CD pipeline

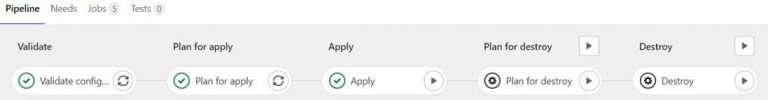

So Terraform is the tool to provision and deploy our resources to the cloud. But how can we automate this process? That’s where the CI/CD pipeline of GitLab comes in. The pipeline uses a GitLab runner that executes the stages and jobs we specify. Every stage consists of one or more jobs. In my example, these are the stages:

- Validate

- Plan for apply

- Apply

- Plan for Destroy

- Destroy

Each stage contains its own jobs. For example, a job in the Validate stage checks my code. If something is wrong in my code, this stage fails and sends a notification, and none of the later stages will run. Once my code is correct, the pipeline moves on to the next stage, which in this case is ‘Plan for apply’.
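The stage order described above maps onto the `stages` keyword of the pipeline file. Below is a minimal sketch, assuming stage identifiers of my own choosing and the official `hashicorp/terraform` Docker image; the exact image tag is an assumption, so pin whichever 0.14 release you actually use. GitLab runs the stages in the listed order, and by default a job in a later stage only starts when every job in the earlier stages has succeeded, which is what stops the pipeline after a failed validation.

```yaml
# Hypothetical skeleton of .gitlab-ci.yml; the stage identifiers are illustrative.
image:
  # Assumed tag: pin the 0.14 release you actually use. The image's entrypoint is
  # the terraform binary, so it is cleared to let the GitLab runner start a shell.
  name: hashicorp/terraform:0.14.11
  entrypoint: [""]

# Stages run top to bottom; by default a failed job stops all later stages.
stages:
  - validate
  - plan_apply
  - apply
  - plan_destroy
  - destroy
```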

We can tell the GitLab runner which stages should run automatically and which ones need manual interaction, or even approval from specific people. In the picture below you can see an example of my pipeline, which has been through three stages. The last of these three is ‘Apply’, which means I have deployed resources to Azure. If I want to delete the resources I deployed, I have to manually start the last two stages: ‘Plan for Destroy’ and ‘Destroy’.

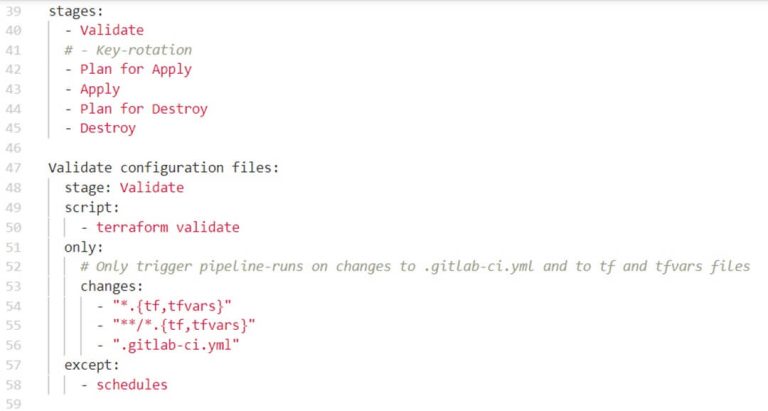
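The two destroy stages can be made manual with `when: manual`. A minimal sketch, assuming hypothetical job names and a plan file name of my own choosing: the saved destroy plan is passed from the first job to the second as an artifact, so the resources that get destroyed are exactly the ones shown in the reviewed plan.

```yaml
# Hypothetical job and file names. Each job starts in a fresh container,
# so both run `terraform init` before doing anything else.
plan_destroy:
  stage: plan_destroy
  script:
    - terraform init -input=false
    # Save a plan that destroys every resource managed by this configuration
    - terraform plan -destroy -input=false -out=destroy.tfplan
  artifacts:
    paths:
      - destroy.tfplan
  when: manual  # waits for a click in the GitLab UI

destroy:
  stage: destroy
  # Fetches the saved destroy plan from the plan_destroy job
  needs:
    - plan_destroy
  script:
    - terraform init -input=false
    # Applying a saved plan file does not ask for interactive confirmation
    - terraform apply -input=false destroy.tfplan
  when: manual
```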

So how do you tell the GitLab runner what to do? With a specific file in the repository: .gitlab-ci.yml, the pipeline configuration file. In this file we tell the runner which stages and jobs to run and in what order. We can also configure which stages start automatically and which ones need manual interaction.

Here’s an example of the .gitlab-ci.yml file:

The above example shows the different stages and the configuration of the Validate stage. The Validate stage has a single command under ‘script’: a Terraform command that validates the Terraform code in the repository. Furthermore, this stage only runs when changes are made to the specific files listed under ‘changes’. After all, you don’t want the pipeline to run every time you change the ‘readme’ file.
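A Validate job along those lines might look like the sketch below. The file patterns under ‘changes’ are assumptions, and the `terraform init -backend=false` step is added here as an assumption too: `terraform validate` needs an initialised working directory, but it does not need access to the remote state.

```yaml
validate:
  stage: validate
  before_script:
    # Download providers and modules only; validation does not need the remote state
    - terraform init -backend=false -input=false
  script:
    - terraform validate
  rules:
    # Hypothetical patterns: only run when Terraform code or the pipeline itself changes
    - changes:
        - "**/*.tf"
        - .gitlab-ci.yml
```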

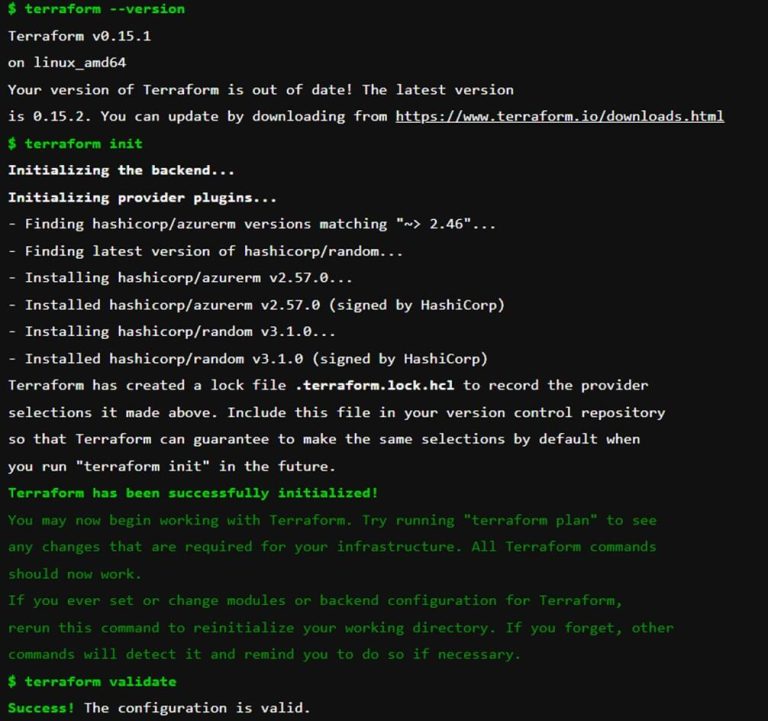

Everything the runner does is shown in the job log of each stage. Below is an example of the Validate stage and the output of the runner.

In the Terraform output we can see that Terraform was initialised correctly and that my code is valid.

Once the ‘Validate’ stage has passed, the ‘Plan for apply’ stage runs automatically. Terraform displays a plan of which resources will be added, changed or destroyed. I have configured the pipeline so that the next stage does not apply this plan automatically when the plan stage passes. In a production environment you may want other people to check and approve the plan before it actually gets deployed to Azure, so in my case applying is a manual action. In test environments you can apply the plan automatically if you want to.
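A sketch of the plan and manual apply jobs, assuming hypothetical job and environment names. The plan is saved to a file and handed to the apply job as an artifact, so what gets applied is exactly what was reviewed. `allow_failure: false` turns the manual job into a blocking step, and if specific people must approve, GitLab’s protected environments can restrict who may run a job that deploys to an environment (availability depends on your GitLab tier).

```yaml
plan_apply:
  stage: plan_apply
  script:
    - terraform init -input=false
    - terraform plan -input=false -out=plan.tfplan
  artifacts:
    paths:
      - plan.tfplan
    # The saved plan can contain sensitive values, so don't keep it around
    expire_in: 1 day

apply:
  stage: apply
  # Fetches the reviewed plan from the plan_apply job
  needs:
    - plan_apply
  script:
    - terraform init -input=false
    - terraform apply -input=false plan.tfplan
  environment:
    name: production  # hypothetical; protect it to restrict who can run this job
  when: manual
  # A blocking manual job: later stages wait until someone runs it
  allow_failure: false
```

For a test environment you could drop `when: manual` and `allow_failure: false` so the plan is applied as soon as it has been created.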

Deployment to the public cloud

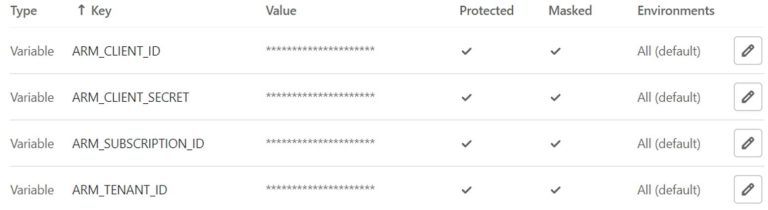

GitLab needs to authenticate to the public cloud. I’ll use Azure as the example here; AWS and GCP use a similar mechanism. The preferred way to authenticate with Azure is a Service Principal. The credentials of the Service Principal can be added as environment variables in GitLab, which GitLab then uses to authenticate to Azure and deploy resources.
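The azurerm provider reads Service Principal credentials from the environment variables `ARM_CLIENT_ID`, `ARM_CLIENT_SECRET`, `ARM_TENANT_ID` and `ARM_SUBSCRIPTION_ID`, so once they are defined under Settings > CI/CD > Variables (with the client secret masked), Terraform picks them up without any credentials in the repository. The sketch below, with a hypothetical template name, adds a check that fails a job early with a clear message when one of them is missing.

```yaml
# Hypothetical template name. The ARM_* values come from CI/CD variables
# defined in the GitLab project settings, never from this file.
.azure_credentials:
  before_script:
    # ${VAR:?message} makes the shell exit with an error if VAR is unset or empty
    - |
      : "${ARM_CLIENT_ID:?must be set as a CI/CD variable}"
      : "${ARM_CLIENT_SECRET:?must be set as a CI/CD variable}"
      : "${ARM_TENANT_ID:?must be set as a CI/CD variable}"
      : "${ARM_SUBSCRIPTION_ID:?must be set as a CI/CD variable}"
```

A job opts in with `extends: .azure_credentials`. Note that a `before_script` defined in the job itself replaces this one instead of being merged with it.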

The picture below shows an example of the GitLab environment variables you need to authenticate to Azure. GitLab will deploy to this tenant and subscription with the credentials of the Service Principal.

Beware of the state file

An important part of Terraform is its state file. Terraform uses it to map real-world resources to your configuration, to keep track of metadata and to improve performance for large infrastructures.

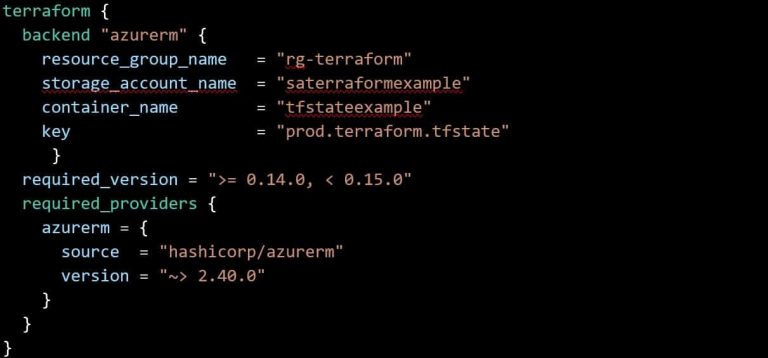

By default, Terraform stores this file locally on your machine, but in a team environment you want to store it centrally in a remote location. To do this, you need some kind of storage that can be accessed remotely, such as a storage account (Azure) or an S3 bucket (AWS). In the Terraform file you then point to that storage location. In Terraform it will look something like this:

In this example you can also see that I have constrained the Terraform version to between 0.14 and 0.15, so that a newer release with breaking changes won’t break my configuration. Additionally, I have declared the Azure provider, because I deploy to Azure and therefore use Azure-specific code in my Terraform files. If you want to deploy to a different cloud or provider, you will have to declare it here as well.
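As an alternative to writing the storage location into the Terraform file, Terraform supports partial backend configuration: the Terraform code keeps an empty `backend "azurerm" {}` block and the pipeline supplies the settings when it runs `terraform init`. A sketch of that approach, in which the variable names, values and template name are hypothetical placeholders for your own storage account; the azurerm backend can authenticate with the same `ARM_*` variables as the provider.

```yaml
variables:
  # Hypothetical placeholders: replace with the details of your own storage account
  STATE_RESOURCE_GROUP: "rg-terraform-state"
  STATE_STORAGE_ACCOUNT: "stterraformstate"
  STATE_CONTAINER: "tfstate"
  STATE_KEY: "demo.terraform.tfstate"

# Hypothetical template; assumes the Terraform code contains an empty backend "azurerm" {} block
.terraform_init:
  before_script:
    # The folded scalar joins these lines into a single terraform init command
    - >
      terraform init -input=false
      -backend-config="resource_group_name=${STATE_RESOURCE_GROUP}"
      -backend-config="storage_account_name=${STATE_STORAGE_ACCOUNT}"
      -backend-config="container_name=${STATE_CONTAINER}"
      -backend-config="key=${STATE_KEY}"
```

Jobs that read or write the state would then use `extends: .terraform_init` instead of calling `terraform init` themselves.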

Now that everything is in place, we can deploy resources to Azure with Terraform and our GitLab pipeline. By deploying resources in a controlled manner, we comply with the quality principles of the organisation, save time and reduce errors.
