Why the cloud is more than “just someone else’s computer”

Recently, I had a conversation with someone at a Public Cloud community meet-up who made the commonly used statement: “There is no cloud! It’s just someone else’s computer.” We had a good laugh about it, but there are still many people who haven’t fully grasped the potential of cloud adoption and view the cloud as “just another data center” or “just someone else’s computer”.

While it is true that you can use platforms like Azure or AWS as a traditional datacenter, you can only harness the full potential of cloud by a) modernising your applications and b) planning a cloud strategy that aligns with your projected business outcomes on multiple levels, such as data innovation, financial outcomes and agility. So why do some people continue to refer to the cloud as merely another person’s computer, and what is the flaw in this assertion?

Evolution

The idea that cloud is just someone else’s computer is incorrect for several reasons. Firstly, using the cloud as a datacenter will result in “cloud bill shock”: the moment an organisation realises that its cloud costs are higher than expected, because running on the cloud in this way is more expensive than running on-premises or in a traditional datacenter.

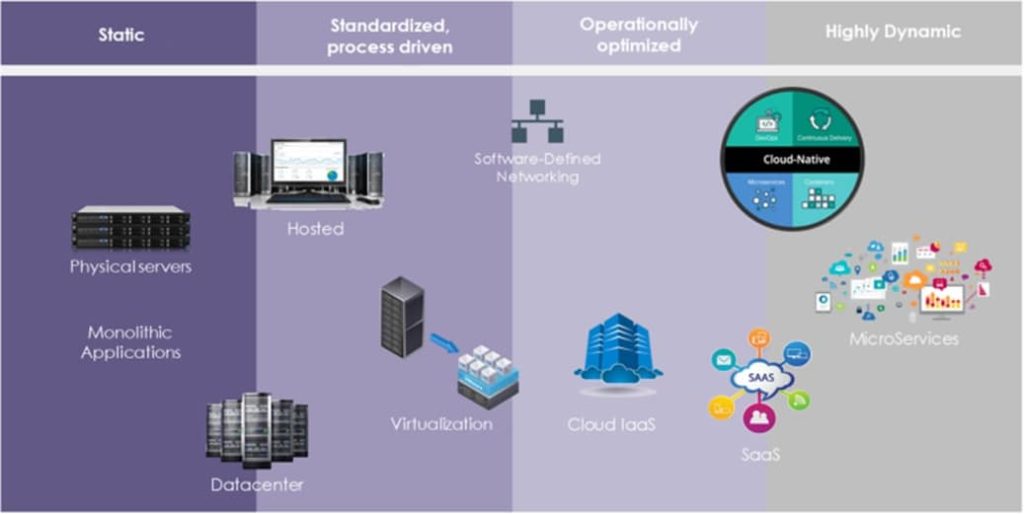

Secondly, the evolution of IT infrastructures has shown that the cloud is far more than just a datacenter. The picture above demonstrates this evolution.

From static times to standardisation and virtualisation

The picture above starts with the situation that was prevalent up until the 1990s and 2000s: every office had a buzzing server room containing physical servers, commonly referred to as ‘pizza boxes’ at that time. Each server had a dedicated task in a static environment. As a result, every server utilised only 7% of its CPU cycles. In theory, therefore, 14 physical servers were being used to do work that a single physical server could have handled.

The emergence of virtualisation technology, like VMware, propelled us into a new era of efficiency. With the ability to run all 14 servers as virtual servers on a single physical server, the underlying hardware was used far more efficiently. Thanks to this virtualisation technology, exceptionally good business cases emerged for hosting providers. Highly secured data centers were filled with hardware, and virtual servers were sold to customers, based on a standardised and process-driven IT platform.
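The consolidation arithmetic behind those numbers can be sketched in a few lines of Python. This is a rough illustration only: the function names are hypothetical, and it assumes CPU is the only constraint, which real capacity planning would not (memory, storage, I/O and headroom all matter).

```python
def workloads_per_host(avg_utilisation_pct: int, target_utilisation_pct: int = 100) -> int:
    """How many workloads fit on one host, looking at CPU only.

    Both arguments are whole percentages, so the division stays exact.
    """
    if not 0 < avg_utilisation_pct <= 100:
        raise ValueError("avg_utilisation_pct must be between 1 and 100")
    if not 0 < target_utilisation_pct <= 100:
        raise ValueError("target_utilisation_pct must be between 1 and 100")
    return target_utilisation_pct // avg_utilisation_pct


def hosts_needed(workloads: int, avg_utilisation_pct: int, target_utilisation_pct: int = 100) -> int:
    """Number of physical hosts needed to run the given number of workloads."""
    per_host = workloads_per_host(avg_utilisation_pct, target_utilisation_pct)
    if per_host < 1:
        raise ValueError("a single workload does not fit within the target utilisation")
    # Integer ceiling division: round up to whole hosts.
    return -(-workloads // per_host)


# 7% utilisation per server: 14 of them fit on one host in theory.
print(workloads_per_host(7))       # 14
print(hosts_needed(14, 7))         # 1
# Leaving headroom (assumed 70% target) lowers the density.
print(workloads_per_host(7, 70))   # 10
```

In practice nobody runs a host at 100%, which is why the optional target utilisation is there; the 70% figure is just an example value.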

However, the evolution of efficiency did not stop there.

Operational optimisation by David and Goliath

Operational optimisation has become crucial in managing complex IT environments with massive amounts of data and virtual servers. Every engineer knows: having petabytes of data and managing thousands of V(X)LANs and virtual servers in multiple datacenters is no joke. It takes a lot of effort from a lot of engineers to manage and maintain them and keep them compliant with the highest security standards.

Solutions like Software Defined Networking and Infrastructure as Code have made these efforts less labor-intensive and more predictable through orchestration. However, traditional platforms still incur high costs to acquire (CapEx), upgrade, and maintain physical assets such as data centers and associated technology. For hosting providers, this requires deals based on long-term contracts and recurring revenue to cover the lifetime of these assets.

In contrast, public cloud providers like Google, Microsoft, and AWS offer pay-per-use models, allowing customers to pay only for the resources they use, by the millisecond. This model is not feasible for traditional hosting providers (David Ltd.), as it increases the risk of losing invested funds when business shrinks. The ‘Big Three’ (Goliath Ltd.: Google, Microsoft and AWS) do not have that problem, as there is always someone asking for their services. They are true ‘giants’ that can offer this model thanks to their vast infrastructure of hundreds or even thousands of data centers across the globe, which opens up the possibility to pay per second or per CPU cycle.
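To make the difference between the two models concrete, here is a minimal sketch in Python. All prices are made-up assumptions, not real provider rates, and the function names are hypothetical; actual cloud pricing has many more dimensions (storage, traffic, reservations, discounts).

```python
def pay_per_use_cost(rate_per_hour: float, hours_used: float) -> float:
    """Monthly cost when you only pay for the hours a resource actually runs."""
    return rate_per_hour * hours_used


def break_even_hours(monthly_fee: float, rate_per_hour: float) -> float:
    """Hours per month at which pay-per-use costs the same as a fixed monthly fee."""
    if rate_per_hour <= 0:
        raise ValueError("rate_per_hour must be positive")
    return monthly_fee / rate_per_hour


def cheaper_option(monthly_fee: float, rate_per_hour: float, hours_used: float) -> str:
    """Return which model is cheaper for the given monthly usage."""
    if pay_per_use_cost(rate_per_hour, hours_used) < monthly_fee:
        return "pay-per-use"
    return "fixed"


# Assumed example prices: 200 per month fixed versus 0.50 per hour on demand.
FIXED_FEE = 200.0
HOURLY_RATE = 0.5

print(break_even_hours(FIXED_FEE, HOURLY_RATE))     # 400.0 hours per month
print(cheaper_option(FIXED_FEE, HOURLY_RATE, 176))  # office hours only: pay-per-use
print(cheaper_option(FIXED_FEE, HOURLY_RATE, 730))  # running 24/7: fixed
```

With these assumed numbers, a workload that only runs during office hours is much cheaper on pay-per-use, while the same workload left running around the clock costs more than the fixed contract. That second case is the “cloud bill shock” of using the cloud as a datacenter.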

Highly dynamic whilst staying efficient

One of the cool things about the cloud is that we all benefit from Goliath, as it enables its customers to reach the “Highly Dynamic” stage by modernising applications through ‘rebuilding’ or ‘rearchitecting’. By making use of the pricing models offered by cloud providers, such as paying per service or per CPU cycle, it is possible to make operations cheaper, more efficient, more secure (if you do it right!), and less labour-intensive.

A successful cloud strategy, for example that of Netflix, ensures that services are always available without a message like “Sorry, we are carrying out maintenance tomorrow morning, so no series will be available at that time”. It’s why we pay Netflix approximately 15 euros a month, instead of hundreds of euros. Netflix has embedded ‘cloud-native’ in its DNA and is therefore always available. However, balancing cost-effectiveness and scalability is critical for success.

It is important to note that even Netflix was not cloud-native from day one: it rebuilt its services for the cloud over a migration that took years. Most organisations likewise have to deal with existing monolithic application landscapes that must be converted to a highly dynamic, cloud-native landscape, which takes a lot of effort and engagement. Moving to a SaaS solution is the easiest cloud-native choice, but if that’s not possible or desired, application modernisation through rearchitecting and rebuilding is the most effective approach.

During the application modernisation process, it’s crucial to keep in mind that while scaling and paying per millisecond can be hugely beneficial, using them inefficiently or incorrectly simply wastes money. Reaching the “Highly Dynamic” phase alone is not enough; you must constantly evaluate efficiency to balance application resource needs with cost savings. To address these challenges, incorporating FinOps into your cloud strategy is essential.
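As a very small illustration of what “constantly evaluating efficiency” can look like, the sketch below estimates how much of a monthly bill pays for idle capacity and flags under-used resources. The resource names, costs, utilisation figures and the 20% threshold are all invented for the example; real FinOps tooling works from billing exports and monitoring data rather than hand-typed numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    monthly_cost: float        # what you pay per month (assumed figure)
    avg_utilisation_pct: int   # average utilisation as a whole percentage


def idle_spend(resource: Resource) -> float:
    """Rough estimate of the monthly spend that pays for unused capacity."""
    return resource.monthly_cost * (100 - resource.avg_utilisation_pct) / 100


def flag_underused(resources: list[Resource], threshold_pct: int = 20) -> list[str]:
    """Names of resources whose average utilisation is below the threshold."""
    return [r.name for r in resources if r.avg_utilisation_pct < threshold_pct]


# Hypothetical inventory for illustration only.
inventory = [
    Resource("web-frontend", 300.0, 65),
    Resource("nightly-batch", 500.0, 7),
]

for resource in inventory:
    print(resource.name, idle_spend(resource))

print(flag_underused(inventory))  # ['nightly-batch']
```

A flagged resource is a candidate for downsizing, scheduling or rearchitecting, not an automatic verdict; the point is to make the trade-off between resource needs and cost visible on a regular basis.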

Watch out for 'the mother of all pitfalls'

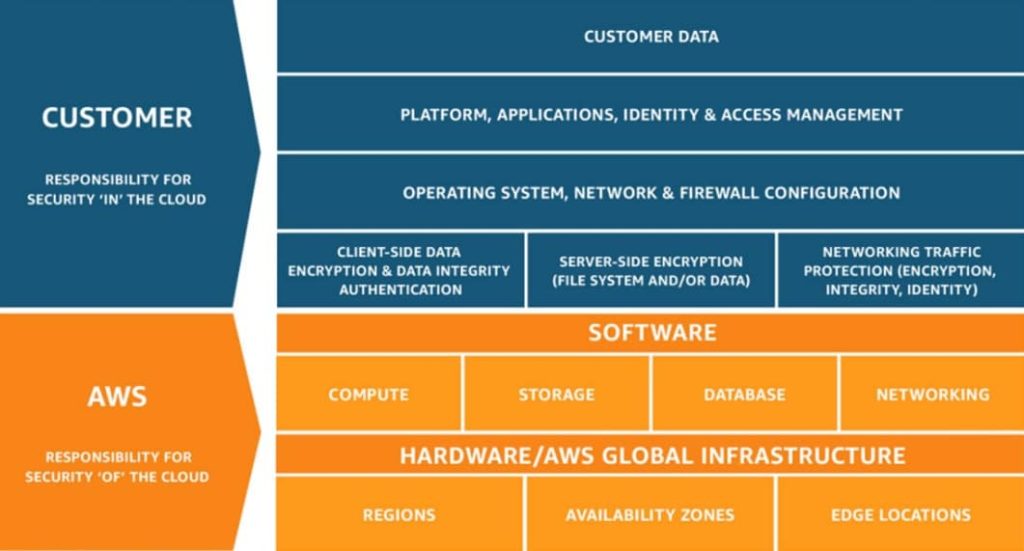

Cloud is exciting, but keep this pitfall in mind: misjudging responsibility when moving from an integral, managed hosting platform or an on-prem platform to a cloud environment. It is tempting to think of AWS, Google or Microsoft as a hosting provider or IT department and to assume they take care of your security ‘in’ the cloud. That is wrong. They take care of security ‘of’ the cloud, not ‘in’ the cloud. As an organisation moves its systems to a cloud infrastructure, responsibilities shift rapidly. AWS depicts this clearly in its shared responsibility model.

The customer organisation itself is responsible for its data, but also for all the platforms, applications, identity and access management, operating systems, network firewalls, data encryption and security in its cloud environment. The responsibility in the blue area shifts to the customer organisation the moment it chooses cloud.

Cloud is a principle

So, coming back to my statement about why the cloud is not just someone else’s computer: in an ideal situation, it is someone else’s CPU cycle. However, the cloud is not only about technology; it’s a principle. To keep this article to a reasonable length, I have focused on the technological part of cloud adoption, but I could write books about the organisational (processes) and cultural (people) change that is required to extract the real value cloud can add to your business. Organisations must understand that making cloud adoption a success is not just about changing technology, but also about changing culture and processes, and about choosing SaaS over PaaS over IaaS.
